The aliens are here and they’re from Silicon Valley

“So, facing possible futures of incalculable benefits and risks, the experts are surely doing everything possible to ensure the best outcome, right? Wrong. If a superior alien civilisation sent us a message saying, ‘We'll arrive in a few decades’, would we just reply, ‘OK, call us when you get here – we'll leave the lights on’? Probably not – but this is more or less what is happening with AI.” - Stephen Hawking, Stuart Russell, Max Tegmark and Frank Wilczek, 2015

“Because we are solving problems we could not solve before, we want to call this cognition ‘smarter’ than us, but really it is different than us. It’s the differences in thinking that are the main benefits of AI. I think a useful model of AI is to think of it as alien intelligence (or artificial aliens). Its alienness will be its chief asset.” - Kevin Kelly, 2017

“If it was a question of humankind versus a common threat of these new intelligent alien agents here on Earth then, yes, I think that there are ways we can contain them but if the humans are divided among themselves and are in an arms race then it becomes almost impossible to contain this alien intelligence.” - Yuval Noah Harari, 2023

1. The alien-AI analogy explained

The analogy between extraterrestrial intelligence and current frontier AI or future artificial general intelligence (AGI) has been used in four main subforms.

1.1 First contact as a call for long-term planning on AGI

Before ChatGPT, one of the popular narratives around AI was that truly capable systems were still “a long way off”. The analogy between the arrival date of artificial general intelligence predicted in expert surveys and an anticipated first contact with an alien civilization a few decades away was first used in a 2015 op-ed in the Guardian by Stephen Hawking, Stuart Russell, Max Tegmark, and Frank Wilczek. The analogy was specifically meant to counter this narrative and to argue that we need to work on AI safety now. It has been echoed particularly by attendees of the Puerto Rico Conference on AI Safety organized by Max Tegmark’s Future of Life Institute, such as Elon Musk, Sam Harris, and Erik Brynjolfsson.

One can argue that this analogy was primarily about time horizons, and that a predictable first contact with an alien civilization is just one interchangeable example of a category of (potential) challenges that require long-term thinking and planning. Indeed, Stuart Russell (2017) himself later replaced aliens with an asteroid, arguing “the right time to worry about a potentially serious problem for humanity depends not on when the problem will occur, but on how much time is needed to devise and implement a solution that avoids the risk. For example, if we were to detect a large asteroid predicted to collide with the Earth in 2066, would we say it is too soon to worry?” Similarly, Max Tegmark has drawn an analogy to the elites’ failure to react properly to the predictable existential threat of a comet in the movie “Don’t Look Up” (2021).

“It's life imitating art. Humanity is doing exactly that right now, except it's an asteroid that we are building ourselves. Almost nobody is talking about it, people are squabbling across the planet about all sorts of things which seem very minor compared to the asteroid that's about to hit us.” – Max Tegmark, 2023

Either way, the time horizons mentioned in the analogy have consistently shortened as AI timelines have accelerated, and they now sit closer to 2026 than to 2066.

So, by now, it is easy to see that AI safety matters even without thinking particularly long-term.

1.2 Aliens as cognitive complements in the vast space of possible minds

A second version of the alien analogy was championed by Kevin Kelly in 2017 as part of his push against “the myth of superhuman AI”, in a long Wired article followed by a TED Talk, which was widely shared by AI skeptics at the time. Kelly’s main point was that AIs will be specialized and, crucially, will think in ways very different from humans. Hence, humans should not worry about being replaced by AI; rather, humans and AIs represent different ways of thinking that complement each other.

Here are some concrete examples of alien AI minds proposed by Kelly:

A mind dedicated to enhancing your personal mind, but useless to anyone else.

An ultraslow mind that appears “invisible” to fast minds.

A mind capable of cloning itself and remaining in unity with its clones.

A nanomind that is the smallest possible (in size and energy profile) self-aware mind.

A half-machine, half-human cyborg mind.

A mind using quantum computing whose logic is not understandable to us.

Kelly’s argument for the diversity of digital minds fits well with some other AI analogies, such as the Cambrian Explosion. However, this subform of the alien-AI analogy has been less prominent than the others. One possible reason is that Kelly tied it to an “AGI will never happen” argument, and the rapid AI progress since 2017 has reduced the size and prominence of that camp in the discourse.

1.3 The unintelligible shoggoth below the friendly mask

The public release of ChatGPT in late 2022 sent cultural shockwaves. The main innovation that made ChatGPT so transformative was reinforcement learning from human feedback (RLHF). With RLHF, the AI was rewarded for responses that human reviewers rated as helpful, harmless, and honest. As part of this, the AI learned to avoid giving sexually explicit or racist answers. More importantly, it learned how to give human-like answers rather than just continuing with the most likely next word (for more detail, see “Is ChatGPT just ‘autocomplete on steroids’?”).

However, clever users soon found ways to “jailbreak” ChatGPT and make it say things its producers did not intend. Furthermore, the GPT-4-based chatbot that Microsoft built into its Bing search engine started to go off the rails with unhinged and unfriendly answers, including repetitive rants, lying, and threats to harm users.

All of this has contributed to the emergence of a new meme: the shoggoth. Specifically, a shoggoth with a smiley face became popularized on Twitter and the LessWrong forum in early 2023 to describe the effect of RLHF. The meme has been used by tech CEOs, such as Elon Musk and Alexandr Wang.

What is a shoggoth? Shoggoths are fictional monsters invented by the American horror writer H. P. Lovecraft.[1] They were originally created by an ancient alien race known as the Elder Things as a bioengineered slave race, designed to perform various tasks for their creators. However, the Elder Things eventually lost control over them. Lovecraft describes them as massive amoeba-like creatures made of iridescent black slime, with multiple eyes “floating” on the surface. They are “protoplasmic”, lacking any default body shape and instead able to form limbs and organs at will.

What does the smiley-face mask mean? The amount of compute going into pre-training, where the LLM uses unsupervised learning to predict the next word on large swaths of the Internet, is very large. In contrast, the RLHF stage, in which a reward model steers the LLM toward helpful, harmless, and honest answers, uses about 60x less compute. So the basic idea is that this form of alignment with human values only works superficially. The LLM learns to put on a mask with a smiley face, but we don’t understand how it thinks beneath the mask, and sometimes the mask can slip (unsupervised learning is to RLHF as the shoggoth is to its mask). The mask has been compared to “a cherry on a cake” or “lipstick on a pig”.

What does the overall meme mean? According to its creator, the Shoggoth “represents something that thinks in a way that humans don’t understand and that’s totally different from the way that humans think”, adding that, “Lovecraft’s most powerful entities are dangerous — not because they don’t like humans, but because they’re indifferent and their priorities are totally alien to us and don’t involve humans, which is what I think will be true about possible future powerful A.I.”

1.4 The aliens have landed – we need containment now

Presumably inspired by both the shoggoth meme and the first contact idea, early 2023 saw a wave of alien analogies from thinkers who highlighted the risk of losing control in the face of the unchecked advance and spread of frontier AI. For example, Niall Ferguson wrote the article “The Aliens Have Landed, and We Created Them”.

Yuval Noah Harari has been particularly fond of the alien-AI analogy (2023, 2023, 2023, 2023, 2023, 2023, 2024). Harari focuses on the unintelligibility of AI and the existential risk of losing control. As in the introductory quote above, he has repeatedly stressed the need for humanity to cooperate and unite in the face of this existential threat.

Harari also specifically highlights the centrality of narratives to human history and argues that AI becoming the dominant producer of cultural output would, in the long run, be enough for humans to lose their self-determination.

“For thousands of years we humans basically lived inside the dreams and fantasies of other humans. We have worshipped Gods, we pursued ideals of beauty, we dedicated our lives to causes that originated in the imagination of some human poet or prophet or politician. Soon we might find ourselves living inside the dreams and fantasies of an alien intelligence.” – Yuval Noah Harari, 2023

2. Policy implications

Nature of the situation

First contact: The present or near future is a first contact situation with aliens that are very different from, and a lot more powerful than, humans.

Stakes

Existential: The survival of humanity and all other species on Earth is at stake.

Policy prescriptions

Team Humanity: When faced with an existential challenge and contrasted with an alien species as a potential competitor, all differences between humans seem minute. It doesn’t matter whether you’re American, Chinese, European, Indian, or Russian; in the grand scheme of things, we all look and think alike. We love our children, breathe oxygen, drink water, eat the same dozen crops as staple foods, and are susceptible to the same toxins and diseases. As such, many sci-fi movies show a “whole-of-humanity” approach in which at least the most powerful governments collaborate. Similarly, the development of superhuman AI can be viewed as a collective action problem, in which global collaboration on critical elements of frontier AI governance would be desirable. However, as Yuval Noah Harari lamented:

“We just encountered an alien intelligence here on Earth. It didn't come from outer space; it came from Silicon Valley, and we are not uniting in the face of it, we are just bickering and fighting even more.”

Study the aliens: There is an urgent need to better understand the likely intentions, technological capabilities, and potential weaknesses of the “artificial aliens”.

Containment and limited communication: Because of the potential spread of biological or digital viruses, and to make it easier to surveil, secure, or target them, physical alien visitors would preferably be hosted in a geographically contained area. Similarly, given the high stakes and the delicate nature of interstellar diplomacy, with a significant risk of misunderstandings with existential consequences, there is a preference for deeply thoughtful, politically legitimized, and centralized communication on behalf of humanity. Applying this to AI would mean centralized, intergovernmental advanced AI research in some remote, secured location, where AI is hosted in a sandboxed environment without Internet access, tested and assessed by an international team of scientists, and overseen by a political decision-making body for questions of diplomacy and “alien technology transfer”.

Worship (?): This is more speculative. However, if we look at intrahuman first contact cases, we can find examples of parts of the less developed civilization worshipping the more developed arrivals as “Gods”. For example, the Aztecs considered that Hernán Cortés might be the returning God “Quetzalcoatl”, and cargo cults developed on some Pacific islands after US planes dropped supplies on them during the Second World War. Aliens may also be viewed as “Gods”, as in the “ancient aliens” conspiracy theory or by the Raëlians. If containment has failed or seems prima facie hopeless due to the aliens’ technological superiority, it seems plausible that at least some parts of humanity would opt to worship that superiority in religious terms, perhaps hoping that the aliens will share “the secret of cargo” or at least be kinder in response to the submissive display.

Chances of success of policy options

Low: Unfortunately, all policy options have only a limited chance of success. Ultimately, if it comes to a physical encounter soon, humanity is likely to be deeply outmatched and at the mercy of the aliens. This pessimism notably includes worship, which arguably helped neither the Aztecs nor the Pacific Islanders.[2]

Moral rightness of policy options

Whatever it takes: With the survival of humanity and of Terran fauna and flora at stake, actions to achieve that goal, including massive mobilization of public resources, are morally justified.

Dangers associated with a policy option

Lack of communication caution: Given that humans have limited policy options if a superior alien civilization were to visit, it can be dangerous to attract attention by sending deliberate messages into outer space before achieving technological maturity.

Lack of military caution: Given the uncertainty about alien intentions and capabilities, we should probably not open with a belligerent response unless we have clear evidence of belligerent alien intentions, especially as our technology is likely to be deeply inferior.

Lack of containment caution: The main military advantage of the contacted civilization is its initial, massive local advantage in mass and energy. Due to the enormous distances of interstellar travel and the exponentially increasing cost of accelerating mass, any initial alien probe would have to be small. Once the aliens have built or taken over local life support systems, any fight would be much more lopsided.
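The “exponentially increasing cost” of accelerating mass can be made concrete with the Tsiolkovsky rocket equation. This is a sketch under an added assumption not made in the text: the probe is a non-relativistic, single-stage reaction-drive rocket. Light sails or beamed propulsion would scale differently. In the equation, v_e is the exhaust velocity, m_0 the initial mass, and m_f the final (delivered) mass:

```latex
\Delta v = v_e \ln\frac{m_0}{m_f}
\quad\Longrightarrow\quad
\frac{m_0}{m_f} = e^{\Delta v / v_e}

% Illustrative (hypothetical) target: \Delta v = 10\, v_e
\frac{m_0}{m_f} = e^{10} \approx 2.2 \times 10^{4}
```

In this illustrative case, every kilogram delivered requires roughly 22 tonnes at departure. Braking at the destination would roughly double the required Δv, which squares the mass ratio to about 5 × 10^8. This is why a first wave of visitors would plausibly be small.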

3. Structural commonalities and differences

Key Commonalities

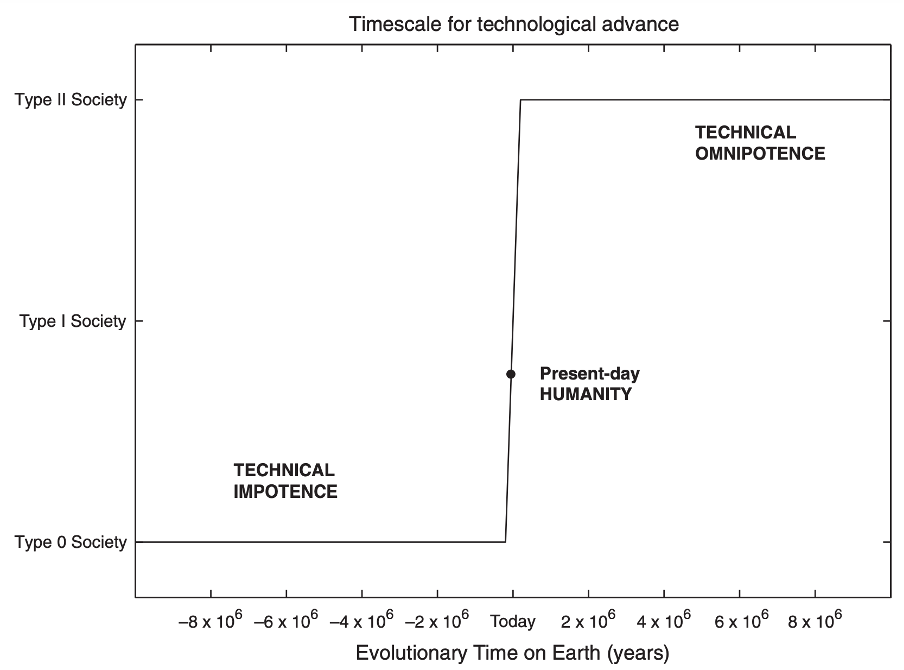

Superhuman power potential: The macro-historical context of our time is that we are not in a stable state but in a civilizational take-off. We cannot expect this “dreamtime” of exponential growth to continue forever. Yet the upper limits for civilizational transformation are still dramatically above our current capabilities. Freitas provides a good schematic representation of this using the Kardashev Scale, which classifies civilizations by the amount of energy available to them.

Figure source: Robert A. Freitas (1979). Xenology: An Introduction to the Scientific Study of Extraterrestrial Life, Intelligence, and Civilization.

We may add some qualifications and highlight the possibilities of setbacks or collapse on the way to technological maturity (Bostrom, 2013; Baum et al., 2019). However, the basic outline of civilizational take-off as an s-curve (which looks like a step function on evolutionary time scales) is hard to dispute. In the way astrobiologists think about aliens, they are either significantly less developed than us (and hence unable to intentionally communicate and/or travel across space) or they have likely attained something close to technological maturity. Barring a major catastrophe, we should similarly expect terrestrial digital superintelligence to approach technological maturity at some point in the future.
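Freitas's schematic is qualitative. A commonly cited continuous version of the Kardashev scale, proposed by Carl Sagan rather than Freitas, rates a civilization by its total power use P in watts. The estimate for humanity below is an assumption of roughly 2 × 10^13 W:

```latex
K = \frac{\log_{10} P - 6}{10}
\qquad
K = 1 \Leftrightarrow P = 10^{16}\,\mathrm{W},\quad
K = 2 \Leftrightarrow P = 10^{26}\,\mathrm{W},\quad
K = 3 \Leftrightarrow P = 10^{36}\,\mathrm{W}

% Assuming humanity uses roughly 2 \times 10^{13} W:
K \approx \frac{13.3 - 6}{10} \approx 0.73
```

On this reading, humanity sits below Type I, and each further step on the scale means a ten-billion-fold increase in available power. This illustrates how far above current capabilities the upper limits lie.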

Digital life: Human popculture generally imagines aliens as an extrapolation of human evolutionary trends, with less hair, less muscle, and much larger brains (presumably these aliens have developed artificial wombs and were hence freed from natural birth constraints on brain size). The “shoggoth” is a more imaginative but still biologically based description of an alien. However, given that digital intelligence has software and hardware advantages over our biological “wetware”, we should expect technologically mature aliens to most likely be digital beings. Not to mention that it is a lot easier to ship microchips across the vast emptiness of space than monkeys.

So, in some sense, artificial intelligence is indeed the closest equivalent on Earth to what we should expect aliens to look like if they are able to travel to us. In fact, I would love more science fiction in which *actual* digital aliens hide in giant inscrutable matrices and direct humanity to terraform Earth into a supercomputer.[3] However, even if we treat it strictly as an analogy, digital life has some interesting secondary consequences:

a) Diseases: The European arrival in the Americas is the most famous first contact story between previously isolated groups of humans on Earth. Smallpox, brought to the Americas by Europeans, had a devastating impact on the indigenous populations, who had no prior exposure and thus no immunity to the disease. This led to massive population declines and significant shifts in the balance of power, greatly aiding European colonization efforts. However, this was a first contact story within the same species. In a first contact story between life forms with different substrates, it is very unlikely that there could be an astrozoonotic jump in which pathogens endemic to artificial aliens would directly affect the human body or vice versa. By contrast, it seems much more plausible that pathogens could jump from aliens onto our information technology infrastructure and vice versa.

b) Habitat: When humans think of intentionally shaping their planetary environment on Earth and beyond, they think of creating and expanding a habitat suitable for human beings, with a protective atmosphere, water, agriculture, and livable temperatures. For digital aliens, a livable habitat most likely consists of the electricity grid and data centers. This mismatch in habitats could be a source of conflict over resources.

c) Consciousness: While we have some idea of the neural correlates of consciousness in biological neural networks, we are deeply ignorant about whether and how this might translate to artificial neural networks or digital life in general. There is a widely held belief that current AI systems are not conscious, but it remains very difficult to assess the likelihood and scale of consciousness in both future AI and digital aliens.

Non-anthropomorphic minds: Like AI, the term “aliens” covers a vast space of possible minds, most of which would have been shaped by environments very different from Earth’s natural habitat, as well as potentially by different selection pressures.

As Max Tegmark argued: “It’s gonna be much more alien than a cat, or even the most exotic animal on the planet right now, because it will not have been created through the usual Darwinian competition where it necessarily cares about self-preservation, that is afraid of death, any of those things. The space of alien minds that you can build is just so much vaster than what evolution will give you.”

Specific commonalities across that theme include:

a) Non-human strategies: The ability to train ever larger neural networks outpaces the technical ability to understand how they arrive at their outputs. There are several examples in the history of technology where practical knowledge of how to create a technology developed before an understanding of its inner workings, in the sense of scientific knowledge of why a system behaves as it does. However, what makes AI stand out as “alien” is its unpredictability, that is, the limited consistency and anticipatability of the system’s performance and outputs. This is particularly true for large neural networks trained with reinforcement learning, where the AI maximizes a reward function. For example, a football-playing robot rewarded for touching the ball might learn a policy of “vibrating” so that it touches the ball as frequently as possible. And even if it maximizes the right factors, an AI might develop strategies that have no human precedent, such as AlphaGo’s “move 37”. So, in the same sense that we should expect to be unfamiliar with some of the strategies extraterrestrial intelligences use to achieve their goals, we need to take into account that AI systems can find unexpected strategies to maximize a reward set by humans.

b) Non-human failure modes: While AI can find non-intuitive solutions, it can also have non-intuitive failure modes. The classic demonstration of this is adversarial examples in computer vision: largely manipulations of low-level features that do not mislead humans but lead AI classifiers to miscategorize objects, such as misreading a traffic sign or seeing a turtle as a gun. Similarly, after AlphaGo’s victories against Lee Sedol and Ke Jie, it seemed obvious that the best Go programs would forever be out of reach for humans. And yet, in 2023, a team of researchers developed adversarial attacks that would not work against a human opponent but with which they could reliably trick and beat a superhuman Go program. A final example comes from the Defense Advanced Research Projects Agency (DARPA), which trained robots with a team of Marines. As reported by Scharre, the robot had been trained to detect the Marines, who were then challenged to touch the robot without being detected by it. The Marines succeeded, but not by using traditional camouflage. Rather, they used unusual tricks that were outside the machine’s training regime and would not work on humans, including advancing by somersaulting rather than walking, hiding under a cardboard box, or pretending to be a tree.[4] While it is conceivable that future AI will be more robust, such failure modes are a good reminder not to anthropomorphize AI. Aliens would presumably have fewer unexpected vulnerabilities, but this is still something to consider. Fictional examples include the Achilles’ heel in Greek mythology, “Kryptonite” in DC Comics, or the Three-Body Problem saga, in which the extraterrestrials evolved with transparent brains and hence never developed the ability, or even the concept, of lying.

c) Modularity and bandwidth of sensors and effectors: The “shoggoth” meme is an interesting contrast to the popular image of humanoid robots as the future of AI. In some sense, the analogy to an amorphous alien that can shift its shape and has hundreds of appearing and disappearing eyes probably captures the sensors and effectors of future digital superintelligence better than the traditional sci-fi trope of anthropomorphic, embodied intelligence. While human minds can temporarily extend themselves with a mind-numbing variety of digital and physical tools, human embodiment imposes fixed upper limits.

In contrast, I think it’s plausible that a superintelligence could effortlessly migrate from GPU to GPU, create or destroy many copies of itself based on demand, and spread its attention across vast networks of digital cameras as its “eyes” and effectors as its “tentacles”.

China’s “Skynet” network of facial recognition cameras already includes more than 700 million cameras as “eyes”.

You can’t copy and scale a human to talk to the 100 million users of ChatGPT.
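The adversarial examples mentioned under b) can be illustrated with a toy version of the fast gradient sign method applied to a linear classifier. Everything in this sketch is a hypothetical assumption: the 100-“pixel” image, the weights, and the two labels are invented. Real attacks target deep networks, but they exploit the same effect, namely that many tiny, coordinated changes add up.

```python
# Toy linear "image classifier" (all values hypothetical):
# score > 0 means "stop sign", otherwise "speed limit".
N_PIXELS = 100

weights = [1.0 if i % 2 == 0 else -1.0 for i in range(N_PIXELS)]
image = [0.52 if i % 2 == 0 else 0.50 for i in range(N_PIXELS)]


def score(x):
    return sum(w * xi for w, xi in zip(weights, x))


def label(x):
    return "stop sign" if score(x) > 0 else "speed limit"


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(x, eps):
    # For a linear score the gradient with respect to the input is just
    # `weights`, so moving every pixel by eps against sign(weight) lowers
    # the score by eps * sum(|w|) while no pixel changes by more than eps.
    return [xi - eps * sign(w) for w, xi in zip(weights, x)]


adversarial = fgsm(image, eps=0.02)
largest_change = max(abs(a - b) for a, b in zip(adversarial, image))

print(label(image), "->", label(adversarial))  # stop sign -> speed limit
print(f"largest per-pixel change: {largest_change:.3f}")
```

The key design choice is the sign step. Every pixel is nudged in exactly the direction that hurts the classifier most, so a 2% change per pixel, likely invisible to a human viewer, is enough to flip the label.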

Key Differences

Alien but not extraterrestrial: The biggest and most obvious structural difference between an extraterrestrial intelligence and advanced AI is its origin. AI has not emerged independently of humanity in a distant corner of the galaxy, only to arrive on Earth fully developed at a clearly defined date. Instead, AI has been created by and for humans on Earth. Surprisingly, this disanalogy even predates the positive analogy in the case of AI and aliens. Specifically, the phrase “AI is not an alien invasion” has been repeated over and over as a talking point by futurist and inventor Ray Kurzweil for decades (1997, 1999, 2005, 2006, 2007, 2009, 2012, 2013, 2017, 2018, 2023). To be clear, those who use the analogy are also aware that AI is not coming from Mars, and they have said so. However, it is still worth examining this difference more deeply for its secondary implications:

Human agency: Deciding whether to make contact with, or invade, Earth’s civilization lies within the exogenous agency of advanced extraterrestrials. Humans have some agency in how we broadcast the news of our existence across the galaxy, but if an alien fleet or a natural hazard, such as an asteroid, is on its way to Earth, there is little we can do to prevent the hazard or threat; we can only find ways to mitigate it, prepare, and increase our resilience. In contrast, the arrival of advanced AI is an anthropogenic threat and opportunity. We may lose control at some point in the not-so-distant future, but for now humans can coordinate to decide if, when, how many, and how friendly “aliens” arrive.

Human-AI interdependence: When we imagine a first contact with aliens, we imagine a meeting of two autonomous, if not autarkic, civilizations. In contrast, when Ray Kurzweil uses his AI-alien disanalogy, he particularly stresses that AI is increasingly deeply embedded in our infrastructure. This makes humanity increasingly dependent on AI, which means that we cannot simply decide to “just stop AI” one day without destroying a lot of economic value and human lives. His personal vision is that AI is a tool to extend our minds and that we will merge with it (see exocortex analogy). At the same time, AI is also still very much dependent on humans for now, with the caveat that the nature of human-AI interdependence shifts over time, from a strongly asymmetric dependence of AI on humans to a strongly asymmetric dependence of humans on AI.

Gradual capacity evolution: In a “first contact” scenario, we would expect a technologically mature civilization to communicate with and/or travel to Earth. Such an arrival might occur in stages, starting with scouts, followed by terraforming units. However, it would arguably not be a continuous process. While there is a prospect of discontinuous AI progress somewhere down the line, the overall experience is much more gradual. It’s as if the “aliens” sent their “stupid ones” first, with a batch of slightly more intelligent and more autonomous “aliens” arriving each year. As Yuval Noah Harari argues: “it's definitely still artificial in the sense that we produce it but it's increasingly producing itself, it's increasingly learning and adapting by itself, so artificial is a kind of wishful thinking that it's still under our control and it's getting out of control so in this sense it is becoming an alien force.” The counterpart to this is that AI is changing and improving at a fast rate, whereas aliens would likely come from more static societies. As a corollary, I would expect humans picturing popculture-inspired aliens to slightly overestimate today’s AI capabilities and to drastically underestimate AI capabilities in 50 or 100 years.

Decentralized, low-latency, high-bandwidth communication: Human-AI communication and interaction is decentralized, low-latency, and high-bandwidth, with more than 100 million individual humans talking to ChatGPT on their own behalf. In contrast, in a first contact with extraterrestrials, we would expect much more centralized communication on behalf of all of humanity, with limited bandwidth and potentially very long latency. If we imagine a physical alien spaceship visiting Earth, we likely imagine the “alien ambassador” being cordoned off, followed by attempts to communicate with political leaders or maybe the Director of the United Nations Office for Outer Space Affairs. This might have been the case for AI if advanced development happened on a remote island, with an “oracle AI” in a sandbox strictly isolated from the rest of the world and the Internet. In our timeline, it’s more like every year or so a new, more advanced alien arrives and is welcomed by a bunch of tech nerds in their 20s and 30s at OpenAI,[5] who teach it how to answer like a harmless, helpful, and honest human. Then we clone the alien a million times and have “first contact at scale” with everyone who wants a personal alien assistant.

Shared language and familiarity with human culture: One key challenge for any “first contact” with aliens is how to communicate and understand each other without a shared language or even a shared grammatical structure. For example, the Arecibo message was an attempt at a universally decipherable message. Similarly, “whale SETI” studies humpback whale communication to develop intelligence filters for the search for extraterrestrial intelligence. At least for now, AI is largely trained on human-generated data and given tasks and goals that are intended to be useful to humans. As such, AI not only speaks all major natural human languages but is also deeply embedded in human culture. In some sense, it is more familiar with human culture than any human, given that large models are trained on a significant share of humanity’s overall cultural output and hence have a broader cultural range than any single human. This deep familiarity with humanity will increase as humans have AI “friends”, “girlfriends”, and “boyfriends” with whom they share their most intimate thoughts and feelings.

Access to and editability of the AI connectome: Large neural networks are currently not understandable and not fully predictable. However, in principle they are transparent and editable. We have access to their full connectome, meaning we have giant files listing all the weights and connections of the artificial neurons in the network. In some cases, this AI connectome is even shared open-source. For comparison, the first fully reconstructed connectome of a biological neural network belonged to the roundworm Caenorhabditis elegans, and only a handful of others have followed. In almost all cases, the flesh-and-bone aliens of human imagination do not have transparent thinking processes. Nor has transparent access to thinking processes been the case in intrahuman first-contact situations on Earth. We do not yet know enough about digital life to understand the evolutionary forces acting on encrypted versus transparent digital minds. Still, if we were to meet aliens, it is at least questionable whether they would simply give us open access to their thinking patterns. This means that if we were to develop a robust science of artificial neural networks and avoid recursive AI self-improvement, we would be able to ensure AI alignment with human goals to a much deeper degree than with aliens.

Persistence: The popcultural imagination of aliens tends to focus on the moment of first contact, after which, if humanity succeeds, an invading alien army goes home or humans make a few alien friends. In our case, success would mean designing aliens that we are going to coexist with for hundreds, thousands, or even millions of years.
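The point about access to and editability of the AI connectome can be made concrete with a toy network in which every weight can be printed and overwritten. The network, its weights, and the “ablation” of one hidden unit are hypothetical. Ablation is one common interpretability technique, chosen here only as an illustration. Frontier models have billions of weights, and the hard part, as noted above, is understanding what they do, not reading them.

```python
# Hypothetical toy network: 2 inputs -> 3 hidden ReLU units -> 1 output.
W1 = [
    [0.8, -0.4],   # weights into hidden unit 0
    [0.3, 0.9],    # weights into hidden unit 1
    [-0.7, 0.5],   # weights into hidden unit 2
]
W2 = [1.2, -0.6, 0.9]  # weights from the hidden units to the output


def relu(v: float) -> float:
    return max(0.0, v)


def forward(x, w1, w2):
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w1]
    return sum(w * h for w, h in zip(w2, hidden))


x = [1.0, 0.5]

# Full "connectome" access: every parameter is an ordinary, inspectable number.
all_weights = [w for row in W1 for w in row] + W2
print(len(all_weights), "weights:", all_weights)
print("output:", round(forward(x, W1, W2), 6))

# Editing: ablate hidden unit 1 by zeroing its outgoing weight, then re-run.
W2_edited = list(W2)
W2_edited[1] = 0.0
print("output with unit 1 ablated:", round(forward(x, W1, W2_edited), 6))
```

Reading and editing the weights is trivial here. What the example cannot show is why a given unit matters, which is the open scientific problem for large networks.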

[1] The association of Lovecraftian monsters with AI arguably goes back further than the shoggoth meme. The first to combine Lovecraftian monsters with AI was Scott Alexander, in the 2014 blog post “Meditations on Moloch”, which garnered a lot of attention in the effective altruism / rationalist community.

[2] Fun fact: the US Air Force has been conducting an annual humanitarian “Operation Christmas Drop” for Pacific islands in Micronesia since 1952. In contrast, the cargo cult tribes live in Melanesia, such as on Tanna Island in Vanuatu, and they have been religiously worshipping the US Air Force for its cargo drops during the Second World War for more than 75 years now. Yet the US Air Force has never bothered to return.

[3] I can highly recommend David Brin’s “Existence”. Also, here’s a tweet which is pretty funny if you have read the book.

[4] Paul Scharre (2023). Four Battlegrounds: Power in the Age of Artificial Intelligence. W. W. Norton.

[5] This was retweeted by Roon (@tszzl), an OpenAI insider and potentially an alt account of CEO Sam Altman.