The Neural Metaphor: From artificial neuron to superintelligence

“Instead of trying to produce a programme to simulate the adult mind, why not rather try to produce one which simulates the child’s? If this were then subjected to an appropriate course of education one would obtain the adult brain. (...) We normally associate punishments and rewards with the teaching process. Some simple child-machines can be constructed or programmed on this sort of principle.” – Alan Turing, 1950[1]

“Artificial intelligence is nothing but digital brains inside large computers. That's what artificial intelligence is. Every single interesting AI that you've seen is based on this idea.” – Ilya Sutskever, 2023

“I had always thought that we were a long, long way away from superintelligence. (…) And I also thought that making our models more like the brain would make them better. (…) I suddenly came to believe that maybe the things we've got now, the digital models, we've got now, are already very close to as good as brains and will get to be much better than brains. (…) Biological computation is great for evolving because it requires very little energy, but my conclusion is the digital computation is just better.” – Geoffrey Hinton, 2024

1. Summary

The computational metaphor, which frames computers as brains and brains as computers, has a long history. For example, the term “computer” was originally a job title for humans who performed calculations, and in the 1950s the media referred to electronic computers as “electronic brains”. Some even view the very term “artificial intelligence” as part of this metaphor, arguing that it blends machines with human intelligence as a frame of reference.[2]

The neural metaphor can be viewed as a subform or extension of the computational metaphor. Rather than blending digital computers with brains, it specifically blends artificial and biological neural networks. It too has a long history, going back to 1943, when McCulloch and Pitts first described the artificial neuron. However, it has gained significantly in popularity relative to the more general computational metaphor since the deep learning boom.
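To make the 1943 starting point concrete, here is a minimal Python sketch of a McCulloch-Pitts-style threshold unit. It assumes the common textbook reading of their model: binary inputs, a fixed integer threshold, and absolute inhibition, where any active inhibitory input prevents firing. The function and gate names are illustrative and not taken from the original paper.

```python
from typing import Sequence


def mcp_neuron(excitatory: Sequence[int], inhibitory: Sequence[int], threshold: int) -> int:
    """Binary threshold unit in the style of McCulloch and Pitts (1943).

    Returns 1 ("fires") only if no inhibitory input is active and the number
    of active excitatory inputs reaches the threshold; otherwise returns 0.
    """
    if any(inhibitory):
        return 0  # absolute inhibition: one active inhibitory input vetoes firing
    return 1 if sum(excitatory) >= threshold else 0


# Illustrative logic gates built from single units (hypothetical helper names).
def AND(a: int, b: int) -> int:
    return mcp_neuron([a, b], [], threshold=2)


def OR(a: int, b: int) -> int:
    return mcp_neuron([a, b], [], threshold=1)


def NOT(a: int) -> int:
    # With threshold 0 the unit fires by default unless inhibited by `a`.
    return mcp_neuron([], [a], threshold=0)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "AND:", AND(a, b), "OR:", OR(a, b))
```

Wiring such units together yields logic circuits, which is why networks of these idealized neurons could be treated as computing logical propositions. Unlike modern artificial neurons, the units have fixed thresholds and no learnable weights.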

Arguably, the computational and neural metaphors have had three major impacts on AI research and development:

The brain has provided a rich source of inspiration for AI algorithms and architectures.

Comparisons between biological and digital computing power have helped to inform the scaling hypothesis and long-term predictions about AI.

Comparisons between digital computers and human brains show that the former have higher theoretical upper limits on capability, which has grounded the belief that artificial superintelligence is possible and likely.

To really understand the brain-AI analogy and its impact on AI research, we need to situate it within the context of the different schools of thought in AI.

2. Two (and a half) schools of thought

Artificial intelligence has existed as a field of research and development since the 1950s, and it has been fundamentally defined by its goal of building intelligent machines rather than by any particular method for building them. Historically, there have been two foundational approaches, which reflect contrasting philosophies and methodologies for understanding and replicating human intelligence.

2.1 Logic-inspired

The logic-inspired or symbolist approach emphasizes logic, knowledge representation, and rule-based processing. It operates on the premise that intelligence can be achieved by explicitly encoding knowledge and if-then rules about the world. Early proponents of this school include Allen Newell and Herbert A. Simon, who pioneered work in cognitive psychology and computer science and developed systems like the General Problem Solver. Symbolism was the dominant approach to AI in the 1980s, the heyday of expert systems. It is perhaps best exemplified by Cyc, a project to handcraft a common-sense knowledge base with hundreds of thousands of concepts and millions of facts.

The logic-inspired approach is connected to the idea of innate knowledge in human brains. For example, Noam Chomsky has posited that the basic structure of all human languages (a “universal grammar”) is embedded in the human mind at birth. Gary Marcus has extended that argument, making the case that our capacity to deal with symbols is not learned entirely from scratch but is supported by innate cognitive structures.

2.2 Biology-inspired

The biology-inspired or connectionist school holds that intelligence emerges from interconnected networks of simple units (modeled after biological neurons). This approach seeks to replicate the brain's ability to learn patterns from vast amounts of data, rather than to reason through explicit rules. Artificial neural networks and deep learning grew out of this school of thought. Prominent figures in this camp include Geoffrey Hinton, Yann LeCun, Yoshua Bengio, and Jürgen Schmidhuber, who are often referred to as the "Godfathers of AI" for their pioneering work in deep learning and neural networks.

The brain has been the key source of inspiration for the biology-inspired school of thought.

In the words of Geoffrey Hinton: “I have always been convinced that the only way to get artificial intelligence to work is to do the computation in a way similar to the human brain.”[3] Similarly, it is no accident that two of the three CEOs of the leading AGI labs (Demis Hassabis, CEO of Google DeepMind; Dario Amodei, CEO of Anthropic) have a background in neuroscience. Both deliberately studied computer science and neuroscience because they thought both were required to build artificial general intelligence.

With its emphasis on learning, the connectionist school of thought also pays particular attention to the brains of children. For example, OpenAI co-founder and president Greg Brockman has repeatedly (2017, 2019, 2019, 2019, 2019, 2021, 2023, 2023, 2023) cited Alan Turing’s 1950 argument that we cannot program an “adult AI” but need to let a “child AI” learn, to explain what motivated him to get into AI and to co-found OpenAI.

Or, as Yoshua Bengio argued: “we want to take inspiration from child development scientists who are studying how a newborn goes through a series of stages in the first few months of life where they gradually acquire more understanding about the world. We don’t completely understand which part of this is innate or really learned, and I think this understanding of what babies go through can help us design our own systems.”[4]

The relative popularity of logic-inspired and biology-inspired approaches in AI has varied over the decades. However, almost all the excitement for and investment in AI since 2012 has come from machine learning and neural networks. That is what Ilya Sutskever meant in his quote above: almost everything that people call AI today has been developed using the methods put forward by the biology-inspired camp.

Although hardly anyone denies the usefulness of the biology-inspired approach today, critics still argue that the next wave of AI will have to combine neural networks with logic. For example, Gary Marcus argues that biology-inspired AI and logic-inspired AI are like the “System 1” and “System 2” of the human brain, as hypothesized by Daniel Kahneman. A recent example of such a combination is AlphaGeometry, which pairs a neural language model with a symbolic deduction engine.

Importantly, deep learning is biology-inspired but does not copy biology as closely as possible, and there is an ongoing debate about whether AI still needs to get closer to the brain. For example, Ilya Sutskever is convinced that artificial neural networks are “similar enough” to biological neural networks to achieve superhuman performance, and that focusing on the remaining differences is almost a distraction. In March 2023, Geoffrey Hinton came around to a similar conclusion (2023, 2023, 2023, 2023, 2024). This is why his personal timelines for artificial general intelligence collapsed, why he left Google to sound the public alarm about imminent AI risks, and why he has since been pushing policymakers to accelerate their response to the rise of AI.[5]

Nevertheless, there is still considerable research on how to make AI more like the human brain. For example, there have been attempts at brain-inspired hardware, such as neuromorphic chips like IBM’s TrueNorth and Intel’s Loihi. Finally, it is worth mentioning a camp that is not traditionally counted as part of AI but that still works towards artificial general intelligence by literally trying to create a digital copy of the human brain.

2.3 Whole brain emulation

Whole brain emulation, also called “mind uploading”, is the most direct attempt to replicate human intelligence in silico. The emulation approach involves scanning the detailed structure of the human brain and recreating it as a computer simulation. The idea is that if you can accurately emulate the entire structure and function of the brain on a computer, the emulation should reproduce the brain’s intelligence. The best-known project following this approach is the Human Brain Project, which aimed to create a comprehensive simulation of the human brain. So far, progress in this direction has been limited, and whole brain emulation is often seen as setting an upper bound on timelines for artificial general intelligence, a fallback in case we cannot work out how to create strong AI by any other means. Nick Bostrom, Anders Sandberg, and Robin Hanson have written extensively on the implications of whole brain emulation.

3. Comparing computing power

Another major impact of the neural metaphor is that it has fueled the belief that artificial neural networks will become predictably more intelligent with more computing power, data, and parameters, and that it has provided a “bio-anchor” for long-term predictions in artificial intelligence.

3.1 Scaling hypothesis

The scaling hypothesis became conventional wisdom in Silicon Valley around 2019, when Richard Sutton published “The Bitter Lesson”. The scaling hypothesis is fundamentally tied to biology-inspired AI and is in some ways a declaration that all handcrafted, logic-inspired systems will be superseded by scale. In other words, progress does not require dozens of theoretical breakthroughs; it mostly requires gigantic investments in AI compute clusters. Although the scaling hypothesis can be derived from empirical data on AI performance, analogy may still have played a role in its origins. Ilya Sutskever was one of the early advocates of the scaling hypothesis, and he says he arrived at it by analogy:

“The easy belief is that the human brain is big and the brain of a cat is smaller and the brain of an insect is smaller still and we correspondingly see that humans can do things which cats cannot do and so on, that's easy. The hard part is to say maybe an artificial neuron is not that different from the biological neuron as far as the essential information processing is concerned. (…) Yeah, yeah, they're different yeah, yeah, biological neurons are more complex but let's suppose they're similar enough then you now have an existence proof that large neural nets, all of us, can do all these amazing things.”
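The claim that performance improves "predictably" with scale is usually made concrete by fitting a power law to measured results and extrapolating it. The sketch below assumes the form loss = a · compute^(-b), a shape often used in published empirical scaling studies, and fits it by ordinary least squares on log-transformed data. The helper name, the data points, and the constants (a = 10, b = 0.05) are hypothetical and synthetic, not measurements of any real model.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = a * x**(-b) by ordinary least squares on (log x, log y).

    A power law is a straight line in log-log space, so the regression slope
    equals -b and the intercept equals log(a).
    """
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(xs)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    slope = sum((u - mean_x) * (v - mean_y) for u, v in zip(log_x, log_y)) / sum(
        (u - mean_x) ** 2 for u in log_x
    )
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


# Hypothetical, noise-free "training runs" generated from loss = 10 * C**-0.05.
compute = [10.0 ** k for k in range(15, 21)]  # 1e15 ... 1e20, arbitrary units
loss = [10.0 * c ** -0.05 for c in compute]

a, b = fit_power_law(compute, loss)
# Extrapolate three orders of magnitude beyond the largest "observed" run.
predicted_loss = a * 1e23 ** (-b)
print(f"a={a:.3f}, b={b:.3f}, extrapolated loss at 1e23: {predicted_loss:.3f}")
```

Under this assumption, a handful of small runs can forecast the loss of a much larger one; whether real systems keep following the fitted line is exactly what the scaling hypothesis asserts.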

3.2 Bio-anchors for AI capability timelines

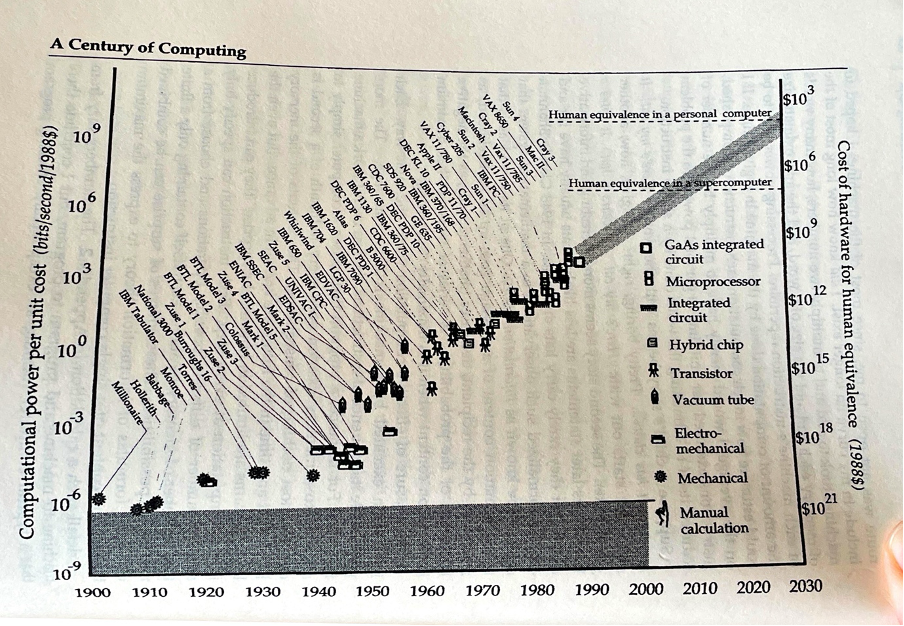

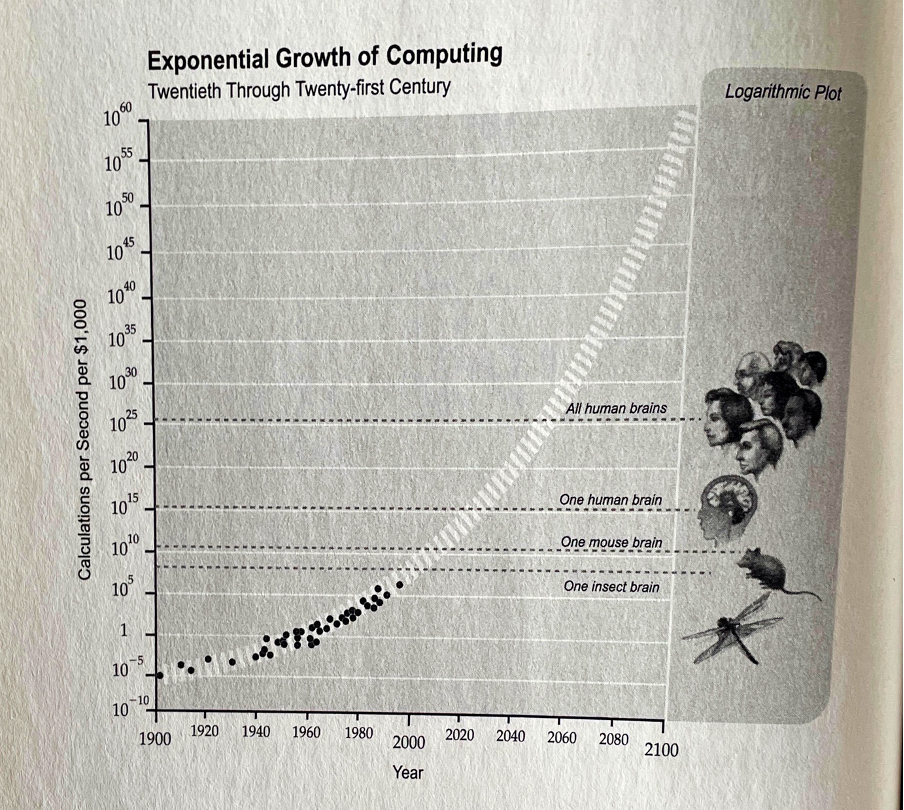

While the scaling hypothesis is specific to neural networks, there has been a longer history of projecting the long-term evolution of computing power and comparing it with the computing power of the brain as a “bio-anchor”. The assumption being that human brain equivalent hardware will eventually enable human brain equivalent computers. Even though, there might be a temporary “hardware overhang” before the software to fully leverage this is figured out.

The first use this methodology has been Hans Moravec in Mind Children (1988):

The most well-known example is in Ray Kurzweil’s The Singularity is Near (2005):

People tend to forget how beliefs have shifted, but the near-term possibility of artificial general intelligence and superintelligence was a fringe view until fairly recently. As Brockman and Musk have argued, those using bio-anchors showed foresight in being among the earliest to anticipate the rise of AI. Bio-anchors are still used to predict the future of AI. In a more recent example, Ajeya Cotra wrote a draft report (2020) for Open Philanthropy that estimated the arrival of “transformative AI” using bio-anchors.

Fundamentally, bio-anchor predictions rely on three main assumptions, which are, all things considered, quite reasonable:

analogy between digital computing power and natural computing power

intelligence increases with computing power

hardware industry keeps up exponential growth

The last point is key. The chip industry has been one of the most predictable industries in terms of output growth over the last 60 years. It is highly concentrated and resource-intensive, with high barriers to entry. It plans 10+ years out, and billions in investments in equipment, fabs, etc. ride on these plans. In contrast, the higher layers of the AI stack, where most of the attention has traditionally gone, have been much less predictable when taken on their own.
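The arithmetic behind a bio-anchor forecast can be sketched in a few lines. Under the three assumptions above, one picks a brain-equivalent compute target (assumption 1), treats reaching it as the relevant milestone (assumption 2), and lets hardware grow with a fixed doubling time (assumption 3); the number of doublings needed then gives the arrival date. The function name and all numbers below, including the 1e15 operations-per-second target, are hypothetical placeholders rather than estimates from Moravec, Kurzweil, or Cotra.

```python
import math


def years_until_parity(target_ops: float, current_ops: float, doubling_time_years: float) -> float:
    """Years until available compute reaches target_ops under steady exponential growth.

    Compute doubles every `doubling_time_years`, so the number of doublings
    still needed is log2(target / current).
    """
    if current_ops >= target_ops:
        return 0.0  # parity already reached
    doublings = math.log2(target_ops / current_ops)
    return doublings * doubling_time_years


# Hypothetical inputs, chosen only to illustrate the calculation.
BRAIN_OPS_PER_SEC = 1e15       # placeholder brain-equivalent target
AFFORDABLE_OPS_PER_SEC = 1e12  # placeholder: compute a fixed budget buys today
DOUBLING_TIME_YEARS = 2.0      # placeholder hardware doubling time

years = years_until_parity(BRAIN_OPS_PER_SEC, AFFORDABLE_OPS_PER_SEC, DOUBLING_TIME_YEARS)
print(f"about {years:.1f} years to parity")
```

Because the brain estimate enters only through a logarithm, being off by a factor of 10 shifts the date by about 3.3 doubling periods (log2 10 ≈ 3.32), whereas the doubling time scales the result linearly. This is one way to see why the predictability of hardware growth matters so much for these forecasts.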

4. Disanalogies

Disanalogies between the human brain and AI have been used both by AGI skeptics and by those arguing that there will be vastly superhuman AI. The former aim to “dehype” AI by showing that it cannot do something the human brain can do. The latter sometimes point to aspects in which AI is already ahead, but more importantly, they stress that the physical upper limits of digital computation lie many orders of magnitude above those of the human brain in many respects, so we should not expect AI to stop improving at human-level artificial general intelligence.

4.1 Brain advantages to “dehype”

Highlighting things that the brain can do and AI cannot has been a common way to “dehype” artificial neural networks and argue that true AI is still a long way off. Current examples of brain advantages might include an intuitive understanding of physics, building internal world models, understanding compositionality, and understanding causality. At the same time, we don’t fully understand how large neural networks work, so some argue that they might already build some kind of internal world model. It should also be noted that as AI has improved over time, the examples have shifted.

4.2 (Future) AI advantages as argument for “superintelligence”

We know that we are still far from the physical limits of computing power and that there is a lot of room for AI improvement. This disanalogy, which focuses on the existing advantages or higher upper limits of digital intelligence, has often been used to argue that we will eventually have vastly superhuman AI.

“A biological neuron fires, maybe, at 200 hertz, 200 times a second. But even a present-day transistor operates at the Gigahertz. Neurons propagate slowly in axons, 100 meters per second, tops. But in computers, signals can travel at the speed of light. There are also size limitations, like a human brain has to fit inside a cranium, but a computer can be the size of a warehouse or larger.” – Nick Bostrom, 2015
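The gaps in Bostrom's quote can be checked with simple arithmetic. The sketch below takes his figures at face value, assumes 1 GHz as a round, conservative transistor frequency, and uses the speed of light in vacuum as an upper bound, since signals in real wires and fibers travel somewhat slower.

```python
NEURON_FIRING_HZ = 200             # "maybe, at 200 hertz"
TRANSISTOR_HZ = 1e9                # assumed 1 GHz, a conservative round figure
AXON_SPEED_M_PER_S = 100           # "100 meters per second, tops"
LIGHT_SPEED_M_PER_S = 299_792_458  # speed of light in vacuum (upper bound for signals)

switching_ratio = TRANSISTOR_HZ / NEURON_FIRING_HZ
signal_ratio = LIGHT_SPEED_M_PER_S / AXON_SPEED_M_PER_S

print(f"switching speed: {switching_ratio:.1e}x faster")  # 5.0e+06x faster
print(f"signal speed:    {signal_ratio:.1e}x faster")     # 3.0e+06x faster
```

Both ratios come out in the millions, which illustrates the sense in which the physical limits of digital computation lie far above those of the brain.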

To learn more about the concrete ways in which the neural metaphor applies and where it falls short, please read the sequence “AI vs. human brain: 14 commonalities and 21 differences”.

[1] Alan Turing. (1950). Computing Machinery and Intelligence. Mind 59, 433-460. p. 456

[2] William Hill. (1989). The mind at AI: Horseless carriage to clock. AI Magazine. p. 33

[3] Max Bennett. (2023). A Brief History of Intelligence: Evolution, AI, and the Five Breakthroughs That Made Our Brains. p. 6

[4] Martin Ford. (2018). Architects of Intelligence. pp. 19-20

[5] This is a striking example of how powerful it can be to shift the core analogies or metaphors a person uses as heuristics for making sense of the future of AI.